The idea of performing an action by thought alone has fascinated people for as long as anyone can remember. Over the years scientists have worked, sometimes actively and sometimes sporadically, to explore ways to make it happen. The initial work, in the late 1920s, centered on the discovery that the electrical signals produced by the human brain can be detected and recorded from the scalp.

The advent of computers was a significant breakthrough in this area of work, as it led the way towards what we know today as the “Brain Computer Interface (BCI)”. The processing power of computers made it possible to analyze and distinguish patterns in the electrical signals produced by the human brain, and so to trigger a desired outcome whenever a particular pattern is identified. For example, when the computer detects brain signal pattern A, it could trigger a tangible action such as activating a switch to turn the lights on or off. In simple terms, a setup that detects electrical signals from the brain and processes the signal patterns to initiate a tangible action is known as a BCI.
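The "pattern A triggers an action" idea can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `Lamp` class stands in for a real switch, and `classify_window` is a toy threshold on signal amplitude, not a real EEG classifier. Actual BCI systems use far more elaborate signal processing to identify patterns.

```python
from typing import Callable, Dict, List, Optional


class Lamp:
    """Hypothetical stand-in for a real device such as a light switch."""

    def __init__(self) -> None:
        self.is_on = False

    def toggle(self) -> None:
        self.is_on = not self.is_on


def classify_window(samples: List[float], threshold: float = 50.0) -> Optional[str]:
    """Toy pattern detector (an assumption, not a real EEG classifier).

    Returns "A" when the mean absolute amplitude of the window exceeds
    the threshold, otherwise None (no known pattern detected).
    """
    if not samples:
        return None
    mean_amplitude = sum(abs(s) for s in samples) / len(samples)
    return "A" if mean_amplitude > threshold else None


def run_bci(
    windows: List[List[float]],
    actions: Dict[str, Callable[[], None]],
) -> List[Optional[str]]:
    """Classify each signal window and trigger the action mapped to its pattern."""
    detected: List[Optional[str]] = []
    for window in windows:
        pattern = classify_window(window)
        detected.append(pattern)
        action = actions.get(pattern) if pattern is not None else None
        if action is not None:
            action()
    return detected


# Made-up sample values; only the second window is strong enough to count as "A".
lamp = Lamp()
windows = [[5.0, -3.0, 4.0], [80.0, -90.0, 70.0], [2.0, 1.0, -1.0]]
detected = run_bci(windows, {"A": lamp.toggle})
```

Keeping the pattern-to-action mapping in a dictionary separates detection from the device being controlled, so the same loop could drive a wheelchair or an appliance by swapping in a different callable.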

BCI has long been a subject of interest in the fields of Rehabilitation and Assistive Technology, where it has enormous potential to improve the lives of people with disabilities. For example, BCI based systems could allow an individual with severely restricted or no range of movement (e.g. due to a spinal cord injury) to drive a power wheelchair or operate household appliances. They could even help someone in a vegetative state to communicate by speaking out the words that the individual would like to say.

There are two main categories of BCI systems: “invasive” and “noninvasive”. Invasive systems interact with the brain directly via electrodes / sensors that are implanted into the brain or onto its surface. Noninvasive systems interact with the brain indirectly via electrodes / sensors placed on the surface of the head that detect brain signal emissions (e.g. Electro-Encephalography (EEG), functional Magnetic Resonance Imaging (fMRI), and Magnetic Sensor Systems).

|

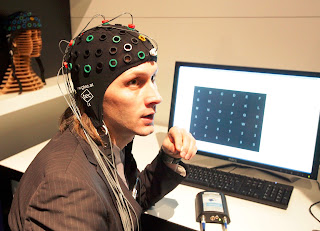

| Example of a noninvasive BCI system in use |

Noninvasive BCI systems usually involve wearing a head cap (aka EEG cap) with multiple holes / slots that hold electrodes over the relevant areas of the head to detect and record the electrical signals emitted by the brain. Electro gel is applied to the electrodes to improve contact between the scalp and the electrode. Because they require gel, such electrodes are also known as wet electrodes. Existing systems can have anywhere from a few to more than 100 electrodes. The practicalities of using wet electrodes (e.g. the gel drying up, repeated cleaning of the electrodes and scalp to set up the EEG cap, irritation of sensitive skin caused by the gel) make them inconvenient for quick setup and daily use. Over the last few years this has prompted the development of dry electrodes. Unlike wet electrodes, dry electrodes do not require gel and can be fitted directly into the EEG cap. Although still early in their development, dry electrodes are quickly catching up with their wet counterparts in detecting high quality brain signals. Comparison studies are regularly carried out to evaluate the performance of wet vs. dry electrodes in the context of EEG based noninvasive BCI.

Quadriplegic woman controlling a robotic arm with thought using an invasive BCI system

Currently, invasive BCI systems are mostly implemented in controlled, research-oriented environments. Rehabilitation and Assistive Technology applications are at the forefront of the research being conducted in this area. Recent breakthroughs include enabling a paralyzed woman to steer a robotic arm with her mind and sip coffee, and controlling a prosthetic arm with thought to perform generic tasks. Both cases required a computer chip to be implanted in the user’s brain. The chip is programmed to collect the electrical signals from the brain and translate them into actions to be carried out by the robotic arm.

Noninvasive EEG based BCI systems have also been an active area of development. Because they can be set up without surgery, noninvasive systems are being tried out for a wider range of applications. The Brainable Project is an example of noninvasive BCI systems being used by people with severe disabilities to control home automation systems. Noninvasive BCI systems have also reached the mainstream market, for the most part in the gaming industry. The Emotiv EPOC is a mainstream noninvasive EEG interface that is sold with an optional SDK for developing customized applications. The EPOC also comes with a number of ready-made applications, such as emotion / mood recognition and virtual games. Similarly, BCI technology from NeuroSky has been incorporated into a number of devices such as media players and video games, the latest being a fluffy wearable pair of cat ears that responds to the emotional state of the user.

The future of BCI looks promising, with immense potential to improve the lives of people with disabilities. Both invasive and noninvasive BCI technologies currently have their own practical drawbacks. Invasive BCI requires a surgical procedure to implant sensors, and noninvasive BCI depends on wet electrodes to detect a good quality brain signal, which makes both inconvenient for day-to-day use. The entry of noninvasive BCI into the games market has been an encouraging development. Although it is still early days, the future seems to be geared towards BCI being seamlessly integrated into video games, with industry giants like Sony (PlayStation) and Nintendo (Wii) looking for new ways for gamers to interact and control play. Widespread usage of BCI should lead to improved hardware and broader application of the technology. Work in the field has evolved enough to continue maturing into a fully developed solution. The question is how much longer it will take for such a breakthrough technology to be perfected.

Sources:

• Brain-Computer Interfaces: Revolutionizing Human-Computer Interaction, B. Graimann, B. Allison, G. Pfurtscheller, 2010

• Brain-Computer Interfaces: Principles and Practice, Jonathan R. Wolpaw, Elizabeth W. Wolpaw, 2012

• Brain-Computer Interfaces: An International Assessment of Research and Development Trends, Theodore W. Berger, John K. Chapin, Greg A. Gerhardt, Dennis J. McFarland, Jose C. Principe, Walid V. Soussou, Dawn M. Taylor, Patrick A. Tresco, 2008

• Simultaneous EEG Recordings with Dry and Wet Electrodes in Motor-Imagery – http://mlin.kyb.tuebingen.mpg.de/BCI2011JS.pdf

• Paralyzed Woman Steers Robotic Arms With Mind And Sips Coffee – http://www.assistivetechnologyblog.com/2012/05/paralyzed-woman-steers-robotic-arms.html

• Controlling A Prosthetic Arm With Thought – http://www.assistivetechnologyblog.com/2012/12/controlling-prosthetic-arm-with-thought.html

• The Brainable Project – http://www.brainable.org/en/Pages/Home.aspx

• Necomimi brain-activated cat ears hit the U.S. – http://www.gizmag.com/necomimi-brain-activated-cat-ears/23302/